Chess Engine

This is my favorite personal project: one I am extremely passionate about and still improve constantly. Since I play chess often, I find it fascinating to inject my own strategy and knowledge into the program through chess-specific heuristics and optimizations.

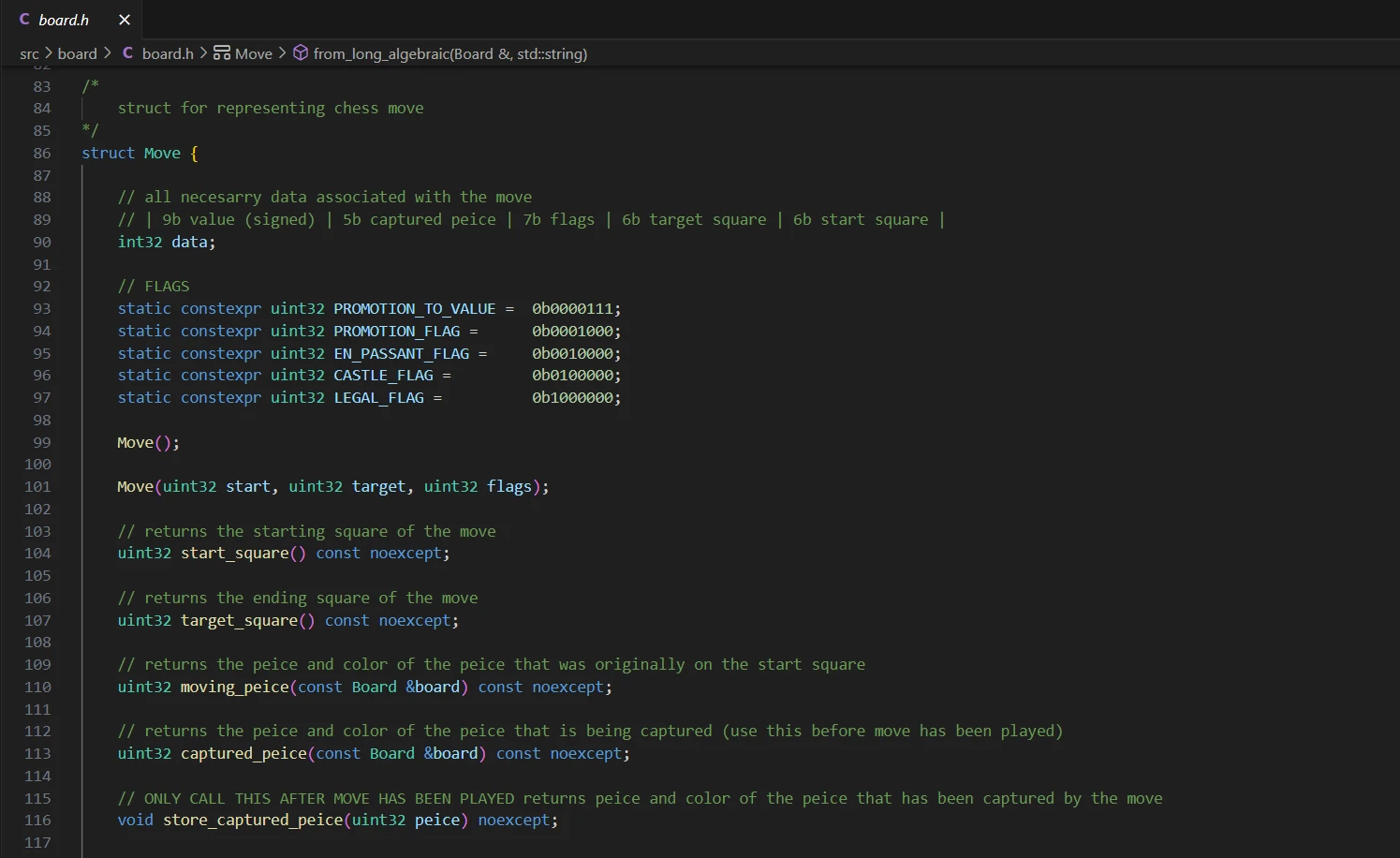

One of my favorite challenges was implementing all of the rules of chess in the move generation framework. Working out all of the edge cases meant testing dozens of positions.

This was my first time using a low-level programming language like C++. I enjoyed the freedom to choose my own programming style and to control memory directly. I recently began rewriting the entire codebase, switching from an object-oriented to a procedural style and leveraging more low-level optimizations such as bitboards. The new version also runs in its own process and uses inter-process communication over the UCI (Universal Chess Interface) protocol to transmit moves.
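
A bitboard stores a set of squares as the bits of one 64-bit integer, so generating moves for every piece of a type becomes a handful of shifts and masks. The snippet below is a minimal, generic sketch of that idea for knights, not code taken from the engine; it assumes the common mapping a1 = bit 0 through h8 = bit 63, and the names `square_bb`, `knight_attacks` and `count_bits` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// One bit per square. Assumed mapping: a1 = bit 0, b1 = bit 1, ..., h8 = bit 63.
using Bitboard = std::uint64_t;

constexpr Bitboard kFileA = 0x0101010101010101ULL;
constexpr Bitboard kFileB = kFileA << 1;
constexpr Bitboard kFileG = kFileA << 6;
constexpr Bitboard kFileH = kFileA << 7;

// Bitboard with only `square` set.
inline Bitboard square_bb(int square) {
    assert(square >= 0 && square < 64);
    return Bitboard{1} << square;
}

// All squares attacked by every knight in `knights` at once. Each shift moves
// the whole set; the file masks drop moves that would wrap around a board edge.
constexpr Bitboard knight_attacks(Bitboard knights) {
    return ((knights << 17) & ~kFileA)             // 2 up, 1 right
         | ((knights << 15) & ~kFileH)             // 2 up, 1 left
         | ((knights << 10) & ~(kFileA | kFileB))  // 1 up, 2 right
         | ((knights << 6)  & ~(kFileG | kFileH))  // 1 up, 2 left
         | ((knights >> 15) & ~kFileA)             // 2 down, 1 right
         | ((knights >> 17) & ~kFileH)             // 2 down, 1 left
         | ((knights >> 6)  & ~(kFileA | kFileB))  // 1 down, 2 right
         | ((knights >> 10) & ~(kFileG | kFileH)); // 1 down, 2 left
}

// Count set bits by repeatedly clearing the lowest one.
inline int count_bits(Bitboard b) {
    int n = 0;
    while (b) { b &= b - 1; ++n; }
    return n;
}
```

Because the whole set is shifted at once, one call covers both knights of a side; engines commonly precompute per-square results like these into 64-entry lookup tables.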

Implementing the traditional minimax algorithm was easy, and I was happy to see that the engine was already quite tactical. Building up its positional knowledge let me inject my own heuristics into the evaluation function. I spent a while experimenting with schemes that make alpha-beta pruning more efficient, such as principal variation search (PVS), move ordering, and transposition table lookups.
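
Principal variation search assumes the first move searched (the one move ordering ranks highest) is the best: it searches that move with the full alpha-beta window, then tries to prove each remaining move worse with a cheap null-window search, re-searching only when that proof fails. The sketch below is a generic fail-hard illustration on a hypothetical toy tree (`Node`, `leaf` and `inner` are made-up helpers), not the engine's code; it leaves out the transposition table and includes plain negamax as a reference to check against.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical toy game tree. A leaf's `eval` is scored from the point of view
// of the side to move at that leaf (negamax convention). Children are assumed
// to be pre-sorted best-first, which is the job of move ordering in an engine.
struct Node {
    int eval = 0;
    std::vector<Node> children;
};

Node leaf(int eval) { Node n; n.eval = eval; return n; }
Node inner(std::vector<Node> kids) { Node n; n.children = std::move(kids); return n; }

constexpr int kInf = 1'000'000;

// Reference: plain negamax with no pruning.
int negamax(const Node& n) {
    if (n.children.empty()) return n.eval;
    int best = -kInf;
    for (const Node& c : n.children) best = std::max(best, -negamax(c));
    return best;
}

// Principal variation search (fail-hard variant).
int pvs(const Node& n, int alpha, int beta) {
    assert(alpha < beta);
    if (n.children.empty()) return n.eval;
    bool first = true;
    for (const Node& c : n.children) {
        int score;
        if (first) {
            score = -pvs(c, -beta, -alpha);       // full window for the expected best move
            first = false;
        } else {
            score = -pvs(c, -alpha - 1, -alpha);  // null window: is this move better than alpha?
            if (score > alpha && score < beta)    // yes, so re-search for an exact score
                score = -pvs(c, -beta, -alpha);
        }
        if (score >= beta) return beta;           // beta cutoff: the opponent avoids this line
        if (score > alpha) alpha = score;
    }
    return alpha;
}
```

With good move ordering most null-window searches fail low right away, which is where the savings come from; with poor ordering PVS still returns the same value, only with more re-searches.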

I also created a front-end application, written in C++ with the SFML library, so that my friends could play against my engine. I was extremely satisfied that none of them were able to beat it.

SIDE PROJECT: For a project in my Computing for Data Analysis class, I researched and implemented a miniature version of Stockfish's NNUE neural network evaluation function. NNUE is a specialized network architecture with quantized activations and a very wide, fully connected first layer that is updated incrementally (only a few of its sparse inputs change per move), which allows fast evaluation on a CPU. I had fun using low-level vectorized (SIMD) CPU instructions in my source code (1st gen) to implement the network from scratch (links: more info | training code).
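
The core NNUE idea can be shown without any SIMD: the first layer's output (the accumulator) is the bias plus the weight column of every active input feature, so after a move only the few features that changed need to be subtracted and added. This is a minimal sketch under stated assumptions, not the project's implementation: a simple 12 x 64 piece-square feature set, a single perspective instead of two, a 16-wide layer, made-up deterministic weights, and a clipped ReLU to [0, 127]. Names such as `refresh`, `update` and `toy_layer` are hypothetical. Plain loops stand in for the vectorized instructions, although compilers can often auto-vectorize loops like these.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Toy sizes (assumptions): 12 piece types x 64 squares as input features and
// a 16-wide first layer. Real NNUE feature sets and layers are much larger.
constexpr int kInputs = 12 * 64;
constexpr int kHidden = 16;

using Accumulator = std::array<std::int16_t, kHidden>;

struct FirstLayer {
    std::vector<Accumulator> columns;  // one weight column per input feature
    Accumulator bias{};
};

// Hypothetical feature index: piece type (0..11) and square (0..63).
int feature(int piece, int square) {
    assert(piece >= 0 && piece < 12 && square >= 0 && square < 64);
    return piece * 64 + square;
}

// Stand-in for trained, quantized weights: small deterministic int16 values.
FirstLayer toy_layer() {
    FirstLayer l;
    l.columns.resize(kInputs);
    for (int f = 0; f < kInputs; ++f)
        for (int h = 0; h < kHidden; ++h)
            l.columns[f][h] = static_cast<std::int16_t>((f * 7 + h * 3) % 11 - 5);
    return l;
}

// Full refresh: bias plus the column of every active feature.
Accumulator refresh(const FirstLayer& l, const std::vector<int>& active) {
    Accumulator acc = l.bias;
    for (int f : active)
        for (int h = 0; h < kHidden; ++h)
            acc[h] = static_cast<std::int16_t>(acc[h] + l.columns[f][h]);
    return acc;
}

// Incremental update after a move: only the features that changed are touched.
void update(const FirstLayer& l, Accumulator& acc,
            const std::vector<int>& removed, const std::vector<int>& added) {
    for (int f : removed)
        for (int h = 0; h < kHidden; ++h)
            acc[h] = static_cast<std::int16_t>(acc[h] - l.columns[f][h]);
    for (int f : added)
        for (int h = 0; h < kHidden; ++h)
            acc[h] = static_cast<std::int16_t>(acc[h] + l.columns[f][h]);
}

// Quantized clipped ReLU: clamp each int16 sum to [0, 127] and store it as int8
// for the next, much smaller layers.
std::array<std::int8_t, kHidden> clipped_relu(const Accumulator& acc) {
    std::array<std::int8_t, kHidden> out{};
    for (int h = 0; h < kHidden; ++h) {
        int v = acc[h];
        out[h] = static_cast<std::int8_t>(std::clamp(v, 0, 127));
    }
    return out;
}
```

The incremental path touches two columns for a quiet move, while a refresh touches one column per piece on the board, which is why the expensive first layer stays cheap during search.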

Now that I am interested in a machine learning approach to chess, I have added functionality for training deep neural networks to the rewritten codebase. I wrote functions that parse the specialized binary training data format and feed it into a custom multi-threaded data loader that my Python code can use.
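
The binary training format is not described here, so the sketch below invents a simple fixed-size, little-endian record purely for illustration (it is not the real format), and all names (`Batch`, `append_record`, `load_batch`) are hypothetical. It shows one common way to parallelize decoding: split the records into contiguous ranges, hand each `std::thread` its own range, and let every thread write into disjoint slices of preallocated output arrays so no locks are needed. Exposing the result to Python (for example through pybind11 or ctypes) is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical record layout (NOT a real format), little-endian:
//   int16 score | uint8 result (0 loss, 1 draw, 2 win) | uint8 count | uint16 features[32]
constexpr std::size_t kMaxFeatures = 32;
constexpr std::size_t kRecordBytes = 4 + 2 * kMaxFeatures;

struct Batch {
    std::vector<float> scores;           // one eval score per sample
    std::vector<float> results;          // game result mapped to 0, 0.5, 1
    std::vector<std::int32_t> features;  // kMaxFeatures per sample, padded with -1
};

// Test helper: encode one record in the hypothetical layout.
void append_record(std::vector<std::uint8_t>& buf, std::int16_t score, std::uint8_t result,
                   const std::vector<std::uint16_t>& feats) {
    assert(feats.size() <= kMaxFeatures);
    auto put16 = [&buf](std::uint16_t v) {
        buf.push_back(static_cast<std::uint8_t>(v & 0xFF));
        buf.push_back(static_cast<std::uint8_t>(v >> 8));
    };
    put16(static_cast<std::uint16_t>(score));
    buf.push_back(result);
    buf.push_back(static_cast<std::uint8_t>(feats.size()));
    for (std::size_t j = 0; j < kMaxFeatures; ++j)
        put16(j < feats.size() ? feats[j] : 0);
}

static std::uint16_t read_u16(const std::uint8_t* p) {
    return static_cast<std::uint16_t>(p[0] | (p[1] << 8));
}

// Decode records [begin, end). Each thread owns a disjoint range of outputs.
static void decode_range(const std::uint8_t* data, std::size_t begin, std::size_t end, Batch& out) {
    for (std::size_t i = begin; i < end; ++i) {
        const std::uint8_t* rec = data + i * kRecordBytes;
        out.scores[i] = static_cast<std::int16_t>(read_u16(rec));
        out.results[i] = rec[2] * 0.5f;
        const std::size_t count = std::min<std::size_t>(rec[3], kMaxFeatures);
        for (std::size_t j = 0; j < kMaxFeatures; ++j)
            out.features[i * kMaxFeatures + j] = j < count ? read_u16(rec + 4 + 2 * j) : -1;
    }
}

Batch load_batch(const std::vector<std::uint8_t>& bytes, unsigned num_threads) {
    assert(num_threads > 0 && bytes.size() % kRecordBytes == 0);
    const std::size_t n = bytes.size() / kRecordBytes;
    Batch out;
    out.scores.resize(n);
    out.results.resize(n);
    out.features.resize(n * kMaxFeatures);
    const std::size_t chunk = (n + num_threads - 1) / num_threads;
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t begin = t * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        if (begin >= end) break;
        workers.emplace_back(decode_range, bytes.data(), begin, end, std::ref(out));
    }
    for (auto& w : workers) w.join();
    return out;
}
```

Preallocating the outputs before starting the threads is the design choice that removes the need for a mutex: threads never resize a vector, they only write their own elements.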

Recently I trained a few deep neural networks (link: more info), and my current mission is to incorporate them into the engine. The challenge is hardware: the engine's search runs sequentially on the CPU, while the neural networks run fastest on the GPU, evaluating batches of positions in parallel. My goal is to implement a new search algorithm, Monte Carlo Tree Search (MCTS), a probabilistic algorithm that can run many simulations in parallel.
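
MCTS repeats four steps: selection, expansion, simulation, and backpropagation. The sketch below is a minimal, self-contained illustration of those steps, not chess: to stay short it plays a toy game (take 1 to 3 stones, whoever takes the last stone wins), uses plain UCT with random playouts, and all names (`MctsNode`, `mcts_best_move`) and the exploration constant `1.4` are illustrative assumptions. In designs that pair MCTS with a neural network (AlphaZero-style engines), the random playout in step 3 is typically replaced by a network evaluation, and many leaves are collected and evaluated together as one GPU batch; this sketch does not attempt that part.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <memory>
#include <random>
#include <vector>

// Toy stand-in for chess: a pile of stones, each turn a player removes 1 to 3,
// and whoever takes the last stone wins.
struct MctsNode {
    int stones;                 // stones left in the pile at this node
    int move;                   // stones taken to reach this node (0 at the root)
    MctsNode* parent;
    std::vector<std::unique_ptr<MctsNode>> children;
    std::vector<int> untried;   // legal moves not yet expanded into children
    int visits = 0;
    double wins = 0.0;          // wins for the player who made `move`

    MctsNode(int s, int m, MctsNode* p) : stones(s), move(m), parent(p) {
        for (int k = 1; k <= std::min(3, s); ++k) untried.push_back(k);
    }
};

// UCT: average result (exploitation) plus a bonus for rarely tried children (exploration).
MctsNode* select_child(const MctsNode& n, double c) {
    MctsNode* best = nullptr;
    double best_score = -1.0;
    for (const auto& ch : n.children) {
        double score = ch->wins / ch->visits +
                       c * std::sqrt(std::log(static_cast<double>(n.visits)) / ch->visits);
        if (score > best_score) { best_score = score; best = ch.get(); }
    }
    return best;
}

// Random playout; returns true if the player to move at the start wins.
bool rollout(int stones, std::mt19937& rng) {
    bool starter_to_move = true;
    while (stones > 0) {
        std::uniform_int_distribution<int> take(1, std::min(3, stones));
        stones -= take(rng);
        if (stones == 0) return starter_to_move;  // this player took the last stone
        starter_to_move = !starter_to_move;
    }
    return false;  // no stones left: the opponent already took the last one
}

// Runs `iterations` of the four MCTS steps, then returns the most visited root move.
int mcts_best_move(int stones, int iterations, unsigned seed) {
    assert(stones > 0 && iterations > 0);
    std::mt19937 rng(seed);
    MctsNode root(stones, 0, nullptr);
    for (int i = 0; i < iterations; ++i) {
        MctsNode* node = &root;
        // 1. Selection: follow UCT while the node is fully expanded and not terminal.
        while (node->untried.empty() && !node->children.empty())
            node = select_child(*node, 1.4);
        // 2. Expansion: add one untried move as a new child.
        if (!node->untried.empty()) {
            int m = node->untried.back();
            node->untried.pop_back();
            node->children.push_back(std::make_unique<MctsNode>(node->stones - m, m, node));
            node = node->children.back().get();
        }
        // 3. Simulation from the new node (result for the player who moved into it).
        bool mover_wins = !rollout(node->stones, rng);
        // 4. Backpropagation: the winning side alternates at each level.
        for (MctsNode* n = node; n != nullptr; n = n->parent) {
            n->visits += 1;
            if (mover_wins) n->wins += 1.0;
            mover_wins = !mover_wins;
        }
    }
    const MctsNode* best = nullptr;
    for (const auto& ch : root.children)
        if (best == nullptr || ch->visits > best->visits) best = ch.get();
    return best->move;
}
```

Each iteration is independent apart from the shared tree, which is what makes MCTS attractive for batching: many selections can be run before their leaf evaluations are sent off together. With 5 stones the winning move is to take 1 (leaving a multiple of 4), and with enough iterations the search should settle on it.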

Come back later and see what I've accomplished!